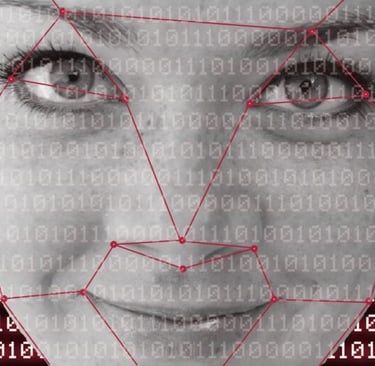

Artificial intelligence at the service of deception: deepfakes and vishing.

LT REDES

3/11/2025, 5 min read

The new era of social engineering?

A 2020 report from the AI and Robotics Center of the United Nations Interregional Crime and Justice Research Institute identified trends in clandestine forums related to the abuse of artificial intelligence (AI) or machine learning, which could gain significant momentum in the near future. Among these trends, the impersonation of humans on social media platforms stood out. Today that future has arrived: what was anticipated is already happening, and it has exceeded expectations.

At the intersection of technological innovation and criminal tactics, concerning phenomena such as the use of deepfakes and vishing (voice phishing) emerge.

Both methods are modern versions of impostor scams (or, as they are also known in Uruguay, "the uncle's tale"). In this tactic, the scammer impersonates a person, usually someone very close to the victim, to trick them out of money.

In 2022, scams of this type were the second most reported category of fraud in the United States, with losses amounting to 2.6 billion dollars.

We can consider these scams modern uses of social engineering. According to KnowBe4, the world's first security awareness and phishing training platform, social engineering is defined as "the art of manipulating, influencing, or deceiving the user to take control of their system."

What role does AI play in this framework? In recent years, cybercriminals have perfected the use of AI to create more credible and agile attacks, increasing their chances of making a profit in a shorter period. At the same time, it allows them to target new victims and develop more innovative criminal approaches while minimizing the chances of being detected.

Creating False Realities: Deepfake and Vishing

The term "deepfake" encapsulates the fusion of two concepts: "deep learning" and "fake." It refers to a sophisticated AI-driven technique that enables the creation of multimedia content that appears authentic but is actually fictitious.

Using deep learning algorithms, deepfakes can overlay faces in videos, alter a person's speech in audio, and even generate realistic images of individuals who never existed.

This concept dates back to the 2010s. One of the first deepfake videos to circulate on the internet was "Face2Face," published in 2016 by a group of researchers from Stanford University who aimed to demonstrate the technology's ability to manipulate facial expressions.

Using two resources—the facial recording of a source actor (a role played by the researchers) and that of a target actor (such as presidents like Vladimir Putin or Donald Trump)—the academics managed to reconstruct the target actors' facial expressions with those of the source actors, in real-time, while maintaining synchronization between voice and lip movements.

Another highly significant deepfake was a video of former President Obama, in which we hear him say: “We are entering an era in which our enemies can make anyone say anything at any time.” In reality, it was not Obama who spoke those words, but a deepfake of him.

For its part, "vishing," a shorthand for "voice phishing," represents an intriguing and dangerous variant of classic phishing. Instead of sending deceptive emails, scammers make phone calls to trick their victims. Using AI-based voice generation software, criminals can mimic the tone, timbre, and resonance of a voice from an audio sample as short as 30 seconds—something they can easily obtain from social media.

Two cases that set off the alarms

Since these early examples, deepfake technology has advanced rapidly and spread widely, to the point that it has caught the attention of the FBI.

In early 2023, the U.S. agency issued a warning after noticing an increase in reports of fake adult videos "starring" victims, created using images or videos that criminals had obtained from their social media accounts.

Live deepfake through a video call

In this context, over the past year, Chinese authorities have intensified surveillance and tightened enforcement following the revelation of a fraud scheme perpetrated using AI.

The incident took place on April 20, 2023, in the city of Baotou, in the Inner Mongolia region. A man surnamed Guo, an executive at a technology company in Fuzhou, Fujian province, received a video call via WeChat, a highly popular messaging service in China, from a friend who was asking for help.

The perpetrator used AI-powered face-swapping technology to impersonate the victim's friend. Guo's "friend" mentioned that he was participating in a bidding process in another city and needed to use the company’s account to submit a bid of 4.3 million yuan (approximately USD 622,000). During the video call, he promised to make the payment immediately and provided a bank account number for Guo to complete the transfer.

Without suspecting anything, Guo transferred the full amount and then called his real friend to confirm that the transactions had been completed successfully. That was when he got an unpleasant surprise: his real friend denied having had a video call with him, let alone having requested any money.

AI-generated fake voices

As for vishing, the first media report of an AI-powered voice fraud came in 2019, when The Wall Street Journal covered a scam in which the British CEO of an energy company was defrauded of 220,000 euros.

The scammers managed to create a voice so similar to that of the German parent company's boss that none of his colleagues in the UK were able to detect the fraud. According to the company's insurance firm, the caller claimed that the request was urgent and instructed the CEO to make the payment within an hour. The CEO, upon hearing the subtle, familiar German accent and voice patterns of his boss, did not suspect anything.

It is presumed that the attackers used commercial voice-generation software to carry out the attack. The British executive followed the instructions and transferred the money, after which the criminals quickly moved the funds from the Hungarian account that had received them to various other locations.

How can we improve our online security?

The evolution of technology in recent years challenges the authenticity of images, audio, and videos. Thus, it becomes essential to strengthen precautions regarding the ways we communicate remotely, through any modality.

As we have seen, social engineering primarily targets people. For this reason, the primary security measure to avoid falling victim to such attacks should focus on user actions.

When receiving calls or messages with requests that seem unusual, even if they come from close contacts and present a credible story (whether through a frequent or unfamiliar communication channel), we should question them and remain skeptical. A recommended practice is to ask personal questions on the spot, to which only that person would know the answer.

But users are not the only ones who can be deceived by vishing or deepfakes; facial and voice authentication systems can also be compromised. The ISO 30107 standard, which has existed for several years, establishes principles and methods for evaluating Presentation Attack Detection (PAD) mechanisms, that is, those designed to detect attempts to fool biometric systems, such as voice or facial recognition, with counterfeit data.

Daniel Alano, an information security management specialist at Isbel, emphasized that one way to improve our online security is to use applications certified with ISO 30107. Alano explained that "it is the standard used to measure whether one is vulnerable to identity spoofing attacks," although he warned that it is not infallible.
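To give a rough idea of what "measuring" means here: the testing part of the standard (ISO/IEC 30107-3) reports PAD performance with error rates such as APCER, the share of attack presentations wrongly accepted as genuine, and BPCER, the share of genuine presentations wrongly flagged as attacks. The Python sketch below is only an illustration of those two ratios. The `Presentation` class, the function names, and the sample data are hypothetical, and a real evaluation under the standard is considerably more detailed (for example, it reports APCER per type of attack instrument).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Presentation:
    """One attempt shown to a biometric system (hypothetical structure)."""
    is_attack: bool             # ground truth: True for a spoof (e.g. a deepfake)
    classified_as_attack: bool  # decision made by the PAD mechanism


def apcer(presentations):
    """Share of attack presentations wrongly classified as bona fide."""
    attacks = [p for p in presentations if p.is_attack]
    if not attacks:
        raise ValueError("no attack presentations in the sample")
    missed = sum(1 for p in attacks if not p.classified_as_attack)
    return missed / len(attacks)


def bpcer(presentations):
    """Share of bona fide presentations wrongly classified as attacks."""
    bona_fide = [p for p in presentations if not p.is_attack]
    if not bona_fide:
        raise ValueError("no bona fide presentations in the sample")
    rejected = sum(1 for p in bona_fide if p.classified_as_attack)
    return rejected / len(bona_fide)


# Made-up sample: 4 spoof attempts (1 slips through) and
# 5 genuine users (1 is wrongly rejected).
sample = [
    Presentation(is_attack=True, classified_as_attack=True),
    Presentation(is_attack=True, classified_as_attack=True),
    Presentation(is_attack=True, classified_as_attack=False),
    Presentation(is_attack=True, classified_as_attack=True),
    Presentation(is_attack=False, classified_as_attack=False),
    Presentation(is_attack=False, classified_as_attack=False),
    Presentation(is_attack=False, classified_as_attack=True),
    Presentation(is_attack=False, classified_as_attack=False),
    Presentation(is_attack=False, classified_as_attack=False),
]

print(f"APCER: {apcer(sample):.2f}")  # 1 of 4 attacks accepted
print(f"BPCER: {bpcer(sample):.2f}")  # 1 of 5 genuine users rejected
```

A lower APCER means fewer spoofs get through, while a lower BPCER means fewer legitimate users are turned away. Neither is ever guaranteed to be zero, which is consistent with Alano's warning that the standard is not infallible.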

If you want to dive deeper into cybercrime stories, we invite you to listen to Malicioso, our podcast about the cyberattacks that paralyzed the world.

Uruguay (HQ)

Paysandú 926

CP 11100

Montevideo

Tel: +598 2902 1477

© 2025 Isbel S.A., a brand Quantik®

Puerto Rico

Av. Ana G. Méndez 1399, km 3

PR 00926

San Juan

Tel: +1 (787) 775-2100

Dominican Republic

Carmen C. Balaguer 10

El Millón, DN

Santo Domingo

Tel: +1 (809) 412-8672